Datafaker dataset generator

[testing CSV kotlin spring jooq datagen programming

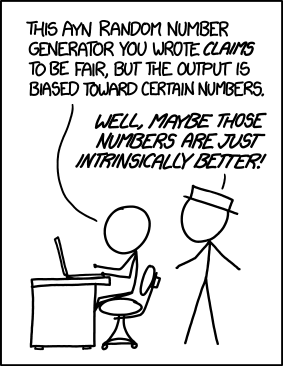

Data generators are not born equal. Source: XKCD

What I wanted to achieve

Integration tests often come with a data challenge: at this test level, we no longer want to have customers with name “Foo” and surname “Bar”, born at the UNIX epoch, you’ve all seen it all before.

The other extreme - using production data directly - might be a nightmare for your other coworkers, namely someone invested in the company’s security.

So, as in many other cases, the middle ground is the direction we want to head towards - anonymized production data or a completely synthetic dataset which at least in some way tries to stay away from customers named “Foo Bar”.

What I initially thought would achieve the goal

| LLM seems to solve all world problems, including (GPU consumption) famine, so data generation would be no problem… right? |

Initially, all my efforts were focused on generating data using strictly LLM, namely Claude Haiku 3.7, which was a state-of-the-art LLM at the time (March 2025).

It seemed like a good use case for LLM, since:

- I already have a full DDL database model in the form of jOOQ generated symbols and Flyway changesets (and these can be fed to as the input, too, if needed)

- from this experiment, LLM proved to be quite good at generating single-table datasets

Enthusiastically, I got to work. All I needed to use was Spring AI integration. I gave Spring access to Claude API using an API key, whose cost, as you might expect, is derived based on used tokens. I was quite pleasantly surprised with token consumption, though - I gave quite a lot of details in the input prompt (DDL, prompt spanning across many paragraphs, …) and the token consumption was still in the order of thousands.

I did not spend more than $2 during my whole-weekend experiment. On the input, there were roughly around ten very simple SQL tables and I wanted to generate tens of rows. This is an excerpt from my API usage log:

| TIME (GMT+1) | INPUT TOKENS | OUTPUT TOKENS |

|---|---|---|

| 2025-03-08 11:50:08 | 4830 | 4096 |

| 2025-03-08 11:49:40 | 4830 | 4096 |

| 2025-03-08 11:49:13 | 4830 | 4096 |

| 2025-03-08 11:48:44 | 4830 | 4096 |

| 2025-03-08 11:48:27 | 4842 | 76 |

| 2025-03-08 11:48:11 | 4842 | 81 |

| 2025-03-08 11:48:04 | 4842 | 407 |

| 2025-03-08 11:47:54 | 4847 | 165 |

| 2025-03-08 11:47:42 | 4847 | 1369 |

| 2025-03-08 11:36:06 | 3783 | 4096 |

| 2025-03-08 11:35:37 | 4037 | 4096 |

| 2025-03-08 11:35:07 | 3864 | 4096 |

| 2025-03-08 11:34:33 | 4044 | 4096 |

| 2025-03-08 11:32:49 | 3834 | 4096 |

| 2025-03-08 11:32:21 | 4088 | 4096 |

| 2025-03-08 11:31:51 | 3915 | 4096 |

| 2025-03-08 11:31:21 | 4095 | 4096 |

| 2025-03-08 11:30:36 | 4001 | 251 |

| 2025-03-08 11:30:15 | 4001 | 1097 |

| 2025-03-08 11:29:50 | 4001 | 3329 |

| 2025-03-08 11:24:27 | 3783 | 4096 |

| 2025-03-08 11:24:06 | 4037 | 3074 |

| 2025-03-08 11:23:37 | 3864 | 4096 |

| 2025-03-08 11:23:09 | 4044 | 4096 |

| 2025-03-08 11:16:05 | 3841 | 1046 |

| 2025-03-08 11:15:35 | 4015 | 4096 |

Notice that the quantities in OUTPUT TOKENS are capped. That’s because

- output tokens are way more expensive than input ones,

- 4096 was the hard cap given by Anthropic at the time.

So… we’re done and can use our Claude dataset, right?

LLM capabilities are not quite there yet, though. To my surprise, most of the data were kind of okay but some issues of the generated data seemed to be insurmountable.

UUID

One of the more suprising issues was UUID. Even though UUID is just a fancy term for a 128-bit number, we’re

all used to using it in the OSF DCE format, e.g. 9c5b94b1-35ad-49bb-b118-8e8fc24abf80.

Claude can recognize when the UUID is correct but has trobles with generating them in the OSF DCE format properly,

even if hinted what the structure looks like; its “favourite” mistake was generating UUIDs with non-hexadecimal

digits in them, e.g. d8e7f6g5-h4i3-j2k1-l0m9n8-o7p6.

This would still be solvable, so I tried not to lose hope.

Foreign key constraints

You might think that quite a mature LLM would crunch this problem without any issues, and in many cases it indeed did, but once I started adding new tables with new FKs, Haiku got completely lost and started hallucinating UUIDs. To my surprise, though, these UUIDs were most of the time at least in the correct format!

At this moment, I started doubting whether LLM is deterministic enough for this task. Inevitably, I would also need to put extra time into writing manual code which would fix the LLM dataset mess.

Hm… maybe manual data synthesis is more practical after all.

Datafaker proved to be the Swiss knife I was looking for!

Datafaker was my next attempt. It’s nothing too crazy - it gives you predefined randomized datasets. You can also define your own.

Since I now had more control over how I’m generating data - as I didn’t have to rely on LLM to definitely give me the same output every time :) - I also decided to implement a few other constraints and met a few different challenges on the way.

I won’t describe the code itself too much as it’s pretty trivial and predictable to what you would expect. See the full source code if you want to dig deeper.

| Language | files | blank | comment | code |

|---|---|---|---|---|

| Kotlin | 25 | 200 | 74 | 1284 |

| CSV | 10 | 0 | 0 | 349 |

| YAML | 3 | 23 | 2 | 130 |

| Gradle | 1 | 29 | 8 | 109 |

| Properties | 1 | 0 | 1 | 3 |

| SUM | 40 | 252 | 85 | 1875 |

CLOC output of the data generation Gradle module. The resulting Gradle module is quite slim compared to the added value it has already given to me.

Static random seed

One of the positive aspects of Datafaker is that you can pass a java.util.Random source to it, which opens a way for deterministic datasets. And that’s exactly what I did!

The heart of the little generator engine is what I call TableRandomizerContext: there is a TableRandomizerContext

for every SQL table and the context has two Datafaker Faker instances:

- PK

Faker, which is - yep, you’ve guessed it - responsible for generating pseudorandom primary keys of the table - Business

Faker, which is used for generating pseudorandom data for other columns of the table

Each table is initialized with a seed which is directly derived from the table name. The reason for having randomizer contexts on the table level is simple: we want to prevent data modification of other tables whenever possible, so when you remove, edit or add a new table, you usually want to keep most of your existing data intact.

data class TableRandomizerContext(

private val pkFaker: Faker,

private val faker: Faker,

private val datasetConfigurationProperties: DatasetConfigurationProperties,

) {

/**

* UUID to be used exclusively by the PK column.

*/

fun TableRandomizerContext.randomUUID(): UUID = UUID(pkFaker.random().nextLong(), pkFaker.random().nextLong())

fun TableRandomizerContext.randomApiaryName(): String =

"${faker.color().name()} ${faker.animal().genus()} Apiary (${

randomBusinessUUID().toString().take(

datasetConfigurationProperties.apiary.apiaryRandomNamePartLength,

)

})"

fun TableRandomizerContext.randomEmail(): String = faker.internet().emailAddress()

fun TableRandomizerContext.randomFrameCount(): Int =

faker.number().numberBetween(

datasetConfigurationProperties.hive.minFrames,

datasetConfigurationProperties.hive.maxFrames,

)

private fun TableRandomizerContext.randomBusinessUUID(): UUID = UUID(faker.random().nextLong(), faker.random().nextLong())

...

}

TableRandomizerContext, written in Kotlin.

The client then uses this randomizer context to generate jOOQ entities, like so:

@Component

class BeehiveDatafaker(

private val generator: JooqCsvGenerator,

private val dsl: DSLContext,

) {

fun apiaries(count: Int): TableGenerationResult<ApiaryRecord> {

logger.info("Generating {} apiary records", count)

val result =

generator.generateRecords(

table = APIARY_,

count = count,

) { index ->

logger.debug("Creating apiary record #{}", index + 1)

dsl.newRecord(

APIARY_,

Apiary(

/**

* THIS is where the randomizer context kicks in.

* It is passed as a receiver of the `generateRecords` callback.

*/

id = randomUUID(),

userId = randomEmail(),

name = randomApiaryName(),

description = randomParagraph(),

established = randomPastDate(),

),

)

}

return result

}

...

}

Tricky data types

Timestamps and geometries proved to be the trickiest columns. Timestamps - as you might expect - require a fixed timestamp which we consider a referential point in time. Geometries were a bit tricky in serializing to WKB (geometry format friendly to PostGIS when it comes to loading data from CSV).

"id","apiary_id","location","name","colony_size","material","frame_count"

4e1b5a02-dc2c-bd7c-1e26-9cf3852ae12a,73d9bbef-a855-7cbd-c530-880abefcf048,01010000000F819F48628A41C00089686C363361C0,Philander calcarifer - orchid - 82832A21,,Bronze,17

e516cd53-cd9f-1518-0566-f2cc034832e8,73d9bbef-a855-7cbd-c530-880abefcf048,01010000007026E827839C3DC0544DD6C9956D2C40,Elephas africanus - tan - 64E16570,10753,Aluminum,17

b1d24a41-ef55-9d90-bacb-b00e807ba80a,73d9bbef-a855-7cbd-c530-880abefcf048,01010000009DC38C5B92C01D409AB8F2E5C78F5FC0,Lagostomus harpyja - indigo - 844AA909,24209,,18

8d1a7c4f-ec20-d34a-89a7-d2243c03b634,73d9bbef-a855-7cbd-c530-880abefcf048,01010000009D5F39E74CA951C0155FA39B73F46140,Tragelaphus aedon - olive - BB72915A,,Copper,14

Refactoring

- Not wanting to give up on Claude completely, I asked it to “implement” the first increment. Living up to my expectations, it was not buggy and did the assigned job; but the code was messy as hell. It seems that Claude just loves using hashmaps everywhere! The code was one big flying spaghetti monster, mutating itself and somehow spitting out the CSV dataset I wanted.

- The next immediate increment was stabilizing output of the monster; basically, playing around with pseudorandomness until it gave me consistent enough results with the same configuration.

- Next, I slowly started refactoring the atrocious code. Logic decoupling, removing hashmaps and inner state, deleting unnecessary logic, …

- I added more thorough logging. Claude again proved to be quite resourceful in this regard.

- Finally, I implemented integration tests which showed performance limitations. Performance was quite poor when I requested datasets in the order of tens of thousands of rows, the generator seemed to choke on OOM, but I didn’t care as generating a few tens to hundreds of rows is usually more than enough. Integration tests helped me polish the code even further without breaking it.

But who knows? Perhaps we’re only one LLM generation away from generating perfect datasets. I’ll definitely keep my Claude’s API key handy…